Back in 2003, Nick Bostrom of the philosophy faculty at Oxford published an overly sensationalised paper in the Philosophical Quarterly under the title "Are You Living in a Computer Simulation?" The paper and the argument it makes sound like something straight out of science fiction, but some commentators are trying to give the impression that the "simulation hypothesis" is a serious and credible scientific model.

Up until now I haven't discussed the paper or thought too much of it, but that hasn't stopped me from hearing about it ad nauseam. Bostrom essentially presents a statistical argument to the effect that we're living in a simulated reality rather than a real one. The argument goes something like this:

1. It is possible to simulate a world like ours, including its conscious observers, on a computer.

2. Human beings will survive to a posthuman stage where the technology to run ancestor-simulations exists and becomes sufficiently widespread.

3. Posthuman civilizations will be interested in running ancestor-simulations of their evolutionary history.

If all three of the premises assumed in the argument are true, then the overwhelming majority of observers with experiences like ours will be simulated observers living in a simulated reality, rather than real observers existing in the real world. We should assume that we're typical observers in the world ensemble, and therefore we're overwhelmingly more likely to be living in a simulated universe than in the real one.

So the fraction of observers with human-type experiences living in a simulated world is

$$f = \frac{f_{p} \bar{N} \bar{H}}{f_{p} \bar{N} \bar{H} + \bar{H}}$$

where $f_{p}$ is the fraction of human-level civilizations that survive to reach a posthuman stage, $\bar{N}$ is the average number of ancestor-simulations run by posthuman civilizations and $\bar{H}$ is the average number of people living in a world before it reaches a posthuman stage.

Bostrom argues that since $\bar{N}$ will be extremely large as a result of the expected advances in computing power, it essentially doesn't matter what the value of $\bar{H}$ is: $f \approx 1$ unless $f_{p} \approx 0$ or the number of posthuman civilizations interested in running ancestor-simulations approaches zero.
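To get a feel for the numbers, here is a minimal Python sketch. It assumes the fraction takes the form $f = f_{p}\bar{N}\bar{H} / (f_{p}\bar{N}\bar{H} + \bar{H})$ used in Bostrom's paper; the function name `simulated_fraction` and all of the input values are made up purely for illustration.

```python
def simulated_fraction(f_p: float, n_bar: float, h_bar: float) -> float:
    """Fraction of human-type observers who live in a simulation.

    f_p   -- fraction of civilizations that reach a posthuman stage
    n_bar -- average number of ancestor-simulations per posthuman civilization
    h_bar -- average number of people who live before a posthuman stage
    """
    if h_bar <= 0:
        raise ValueError("h_bar must be positive")
    simulated = f_p * n_bar * h_bar  # observers inside ancestor-simulations
    real = h_bar                     # observers in the unsimulated world
    return simulated / (simulated + real)


# Illustrative, made-up inputs: 1% of civilizations become posthuman,
# each runs a million simulations, and 100 billion people precede that stage.
print(simulated_fraction(0.01, 1e6, 1e11))

# A very different h_bar gives essentially the same answer, since it cancels.
print(simulated_fraction(0.01, 1e6, 1e9))

# With no posthuman civilizations at all, the fraction drops to zero.
print(simulated_fraction(0.0, 1e6, 1e11))
```

With these particular inputs the fraction comes out at roughly 0.9999, and it only moves away from 1 when the product of `f_p` and `n_bar` is small. That is why the whole argument hinges on the premises rather than on the arithmetic.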

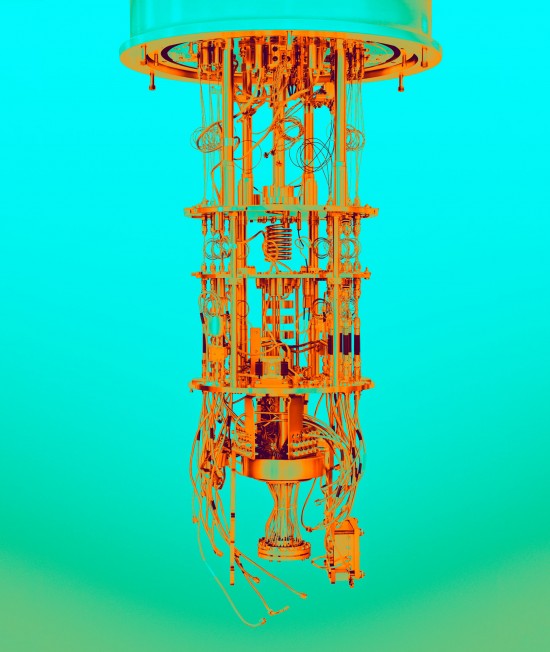

In terms of physics, the proposal is incredibly difficult to make work. Classical computers can’t simulate a universe like the one we inhabit because of quantum mechanics. Any theory that reproduces the results of quantum theory in terms of classical physics has to include non-locality, which violates Lorentz symmetry. An alternative version of the proposal is that our world, the laws of nature, space-time, matter and energy are composed of qubits instead of classical bits, and encoded in an algorithm running on a quantum computer.

Quantum computers operate according to quantum mechanics rather than classical physics, but even in this more elaborate proposal, Lorentz invariance can’t be preserved. If fundamental physics is discrete and space and time are built up out of pixels like a software program, so that one observer detects units of space and time that are elementary with respect to that observer’s frame of reference, then another observer travelling close to the speed of light will see those length scales further Lorentz contracted, which violates the principle of relativity.
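To make the contraction step concrete, here is the standard length-contraction relation from special relativity. The symbol $\ell_0$ is my own shorthand for the supposed elementary length in the first observer's frame, and $v$ is the relative speed of the second observer:

$$\ell = \ell_0 \sqrt{1 - \frac{v^2}{c^2}} = \frac{\ell_0}{\gamma}, \qquad \gamma = \frac{1}{\sqrt{1 - v^2/c^2}}$$

For example, at $v = 0.8c$ we get $\gamma = 5/3$, so the second observer measures $\ell = 0.6\,\ell_0$, a length shorter than the one that was supposed to be the smallest possible.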

Moreover, if this picture of spacetime were true, then the information encoded in a region of space ought to be the sum of the information carried by the individual atomic components that make up that region.

If space and time can’t be simulated either classically or on a quantum computer, that makes the first premise false, which is enough to defeat the argument.

Some commentators think that the first premise could be false for another reason, namely that consciousness can’t be simulated. There could be something to this argument as well. Bostrom assumes "substrate independence", but the fact that I can simulate something that looks like rain on a desktop doesn’t mean that the computer itself is wet inside. The section of Bostrom’s paper that deals with this part of the argument simply takes it as a given, but several approaches to the philosophy of mind (e.g., those of Searle, Descartes, Plantinga, or Putnam) are inconsistent with functionalism.

Even granting the assumption that you could simulate consciousness neuron-by-neuron, each person is an individual that can’t be "copied and pasted", which makes it hard to believe that simulated observers will outnumber real observers.

There are other contentious philosophical assumptions made in the argument. If simulated worlds are able to create their own simulations, then they won't have access to as much computing power as their forebears did. So the worlds they create will necessarily have lower resolution, and at the lowest level that results in worlds that are simulated but don't themselves have the ability to run simulations. Moreover, we are most likely in one of these worlds, since they are the most plentiful. This makes the argument self-undermining, since it starts with "we can run simulations of our past" and concludes with "we are most likely living in a world where we can't run ancestor-simulations".
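The nesting point can be illustrated with a toy model. This is only a sketch under assumptions of my own: every world able to run simulations runs the same number of them (`sims_per_world`), each child world gets its parent's computing budget divided by a fixed `divisor`, and a world needs at least `min_compute` units to run simulations at all. None of these parameters comes from Bostrom's paper.

```python
def nested_worlds(root_compute: int, min_compute: int,
                  divisor: int, sims_per_world: int):
    """Return (depth, number_of_worlds, can_simulate) for each simulated level.

    All parameters are hypothetical knobs for a toy model, in arbitrary units.
    """
    if divisor <= 1 or min_compute < 1:
        raise ValueError("need divisor > 1 and min_compute >= 1")
    levels = []
    compute, worlds, depth = root_compute, 1, 0  # depth 0 is the real world
    while compute >= min_compute:
        # Every world at the current depth can afford to run simulations.
        depth += 1
        worlds *= sims_per_world      # each parent spawns the same number
        compute //= divisor           # each child gets a reduced budget
        levels.append((depth, worlds, compute >= min_compute))
    return levels


levels = nested_worlds(root_compute=1000, min_compute=10,
                       divisor=10, sims_per_world=5)
for depth, worlds, can_simulate in levels:
    print(depth, worlds, can_simulate)

total = sum(worlds for _, worlds, _ in levels)
stuck = sum(worlds for _, worlds, can in levels if not can)
print(f"{stuck} of {total} simulated worlds cannot run simulations")
```

In this toy setup the deepest level, the one that can no longer run simulations of its own, contains more worlds than all the shallower simulated levels combined, which is the situation the paragraph above describes.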

There are still other fairly obvious problems with the argument, such as "why doesn't the computer simulation crash?" or "why waste computing power simulating anything outside of our galaxy?" In theory, you could poke several more holes in the argument, but I think I've written enough to illustrate why I have a hard time taking the simulation hypothesis seriously.

