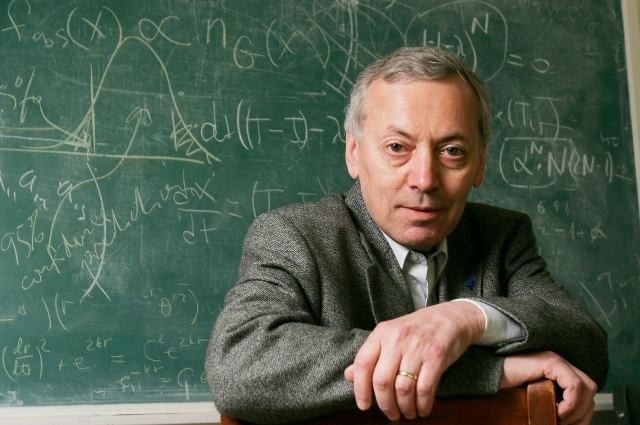

Alexander Vilenkin, one of the major contributors to the field.

Quantum cosmology is an incredibly immature and esoteric branch of theoretical physics, with a whole series of practical and conceptual problems. Christopher Isham argues that, because of this, no one is really sure whether quantum cosmology is even a valid branch of science or whether the whole project is simply misconceived.

The distinctive claim of quantum cosmology is that quantum theory applies not just to things within the universe but to the universe itself, particularly when the universe was small enough, near the Big Bang.

The first and third most popular posts I wrote on this blog were about the Hartle-Hawking and Vilenkin tunneling from "nothing" models, both of which are proposals for a quantum state of the universe (quantum cosmologies). Having reread these, and in light of Hawking's death, I thought it might be useful to explain and contrast the two in a bit more depth in a single post.

If I throw a Sharpie across a lecture theater, its trajectory will be determined by (a) its initial starting position and initial velocity, and (b) Newton's second law of motion. Newtonian mechanics is consistent with a wide variety of possible trajectories the object could travel; the law alone does not pick one out. Similarly, Einstein's field equations allow for a large number of possible histories that could emerge from an initial singularity; the theory itself cannot select among these variants.
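As a toy illustration of that point (my own sketch, not part of any cosmological model), the snippet below steps Newton's second law forward for a projectile, assuming uniform gravity with g = 9.81 m/s² and no air resistance. The law and the helper `trajectory` (a name I made up) are identical in every run; only the initial velocity changes, and so does where the object lands.

```python
G_ACCEL = 9.81  # gravitational acceleration in m/s^2 (assumed uniform, no drag)


def trajectory(x0, y0, vx0, vy0, dt=1e-3):
    """Step Newton's second law (acceleration (0, -g)) forward until the
    object drops below its launch height; return the landing x position."""
    x, y, vx, vy = x0, y0, vx0, vy0
    while True:
        # semi-implicit Euler: update velocity from the force, then position
        vy -= G_ACCEL * dt
        x += vx * dt
        y += vy * dt
        if y < y0:
            return x


if __name__ == "__main__":
    # Same law, same launch point, three different initial velocities:
    for vx0, vy0 in [(5.0, 5.0), (3.0, 8.0), (8.0, 2.0)]:
        landing = trajectory(0.0, 1.5, vx0, vy0)
        print(f"v0 = ({vx0}, {vy0}) m/s -> lands at x = {landing:.2f} m")
```

The extra ingredient that picks out one trajectory is the initial condition, which is exactly what quantum cosmology has to supply for the universe.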

In a standard quantum mechanical experiment, the experimenter determines the boundary conditions by how they set up the physical system, but in quantum cosmology the quantum mechanical system is the universe itself. There is no experimenter "outside" the universe, so instead we have to postulate some new, independent law that constrains the initial (singularity-free) state of the universe.

Another way of putting this is that while the equations of general relativity allow for a family of exact solutions, quantum cosmology does not: there is only one solution to the basic equations and boundary conditions. This is what some quantum cosmologists mean when they claim to predict a unique universe. There may, of course, still be many approximate solutions, but these are not themselves exact solutions.

This still leaves plenty of exotic possibilities for theoreticians to fantasize about, but it also has some obvious practical limits, particularly because of the cosmic no-hair theorem and inflation. Whatever the initial state of the universe, inflation will 'smooth' it out to resemble something like our universe, provided the energy density curve (the inflaton potential) is set just right. How, then, do we retrodict back into the past to determine what the initial state was? The problem is even worse if eternal inflation turns out to be the correct version of inflation.
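To see why retrodiction is so hard, take one kind of 'hair': spatial curvature. With $\Omega$ the density parameter, $|\Omega - 1| = |k|/(aH)^2$, so if $H$ stays constant during inflation while $a$ grows by a factor $e^N$ over $N$ e-folds, any initial deviation is suppressed by $e^{-2N}$. The sketch below is an idealization assuming exactly constant $H$; the starting values are arbitrary, and 60 e-folds is just a commonly quoted ballpark.

```python
import math


def curvature_after_inflation(omega_minus_one, efolds):
    """|Omega - 1| = |k| / (a H)^2. With H constant and a growing by e^N,
    an initial deviation is multiplied by exp(-2N)."""
    return omega_minus_one * math.exp(-2.0 * efolds)


if __name__ == "__main__":
    # Wildly different (hypothetical) initial states...
    for dev in (0.5, 10.0, 1.0e4):
        after = curvature_after_inflation(dev, 60)
        # ...all end up indistinguishable from a flat universe.
        print(f"initial |Omega-1| = {dev:>8g} -> after 60 e-folds: {after:.1e}")
```

Running this map backwards would require measuring $\Omega - 1$ to dozens of decimal places, which is the practical problem in a nutshell.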

Furthermore, how do you set the boundary conditions of the universe in some non-arbitrary way? The answer depends on the model under consideration, but it's important to keep in mind that there are several possibilities, which means any one proposal is enormously speculative. Stephen Hawking and James Hartle have probably the most interesting response to this second problem.

Most approaches to quantum cosmology (including all the ones I discuss here) use the sum-over-histories (path integral) approach, in which the wave function of the universe for a given 3-metric and matter field configuration is obtained by integrating over all 4-dimensional geometries (and matter fields) that obey that model's boundary condition. The equivalence between this approach and the canonical, Wheeler-DeWitt formulation has only ever been shown for simplified models.
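To make "sum over histories" concrete, here is a toy version for ordinary one-particle quantum mechanics, not gravity. Time is chopped into a few steps, the particle's position is restricted to a small grid, and every path with fixed endpoints (the "boundary condition") is weighted by $e^{-S_E}$, where $S_E$ is the discretized Euclidean action of a free particle ($\hbar = 1$). The grid, mass and step size are arbitrary illustrative choices, and the function names are my own. A brute-force sum over every path and a step-by-step (transfer-matrix) sum should agree; in quantum cosmology the "paths" would be whole 4-geometries and matter fields, which nobody can enumerate like this.

```python
import itertools
import math


def euclidean_action(path, mass=1.0, dt=1.0):
    """Discretized Euclidean action of a free particle: sum of m (dx)^2 / (2 dt)."""
    return sum(mass * (b - a) ** 2 / (2.0 * dt) for a, b in zip(path, path[1:]))


def sum_over_histories(grid, start, end, steps, mass=1.0, dt=1.0):
    """Brute force: enumerate every lattice path from start to end in `steps`
    time steps and add up its weight exp(-S_E)."""
    if steps < 1:
        raise ValueError("need at least one time step")
    total = 0.0
    for middle in itertools.product(grid, repeat=steps - 1):
        path = (start, *middle, end)
        total += math.exp(-euclidean_action(path, mass, dt))
    return total


def transfer_matrix_sum(grid, start, end, steps, mass=1.0, dt=1.0):
    """The same sum built one time slice at a time (start and end must be grid
    points): weight(y) = sum over x of weight(x) * exp(-m (y - x)^2 / (2 dt))."""
    weights = {x: (1.0 if x == start else 0.0) for x in grid}
    for _ in range(steps):
        weights = {
            y: sum(w * math.exp(-mass * (y - x) ** 2 / (2.0 * dt))
                   for x, w in weights.items())
            for y in grid
        }
    return weights[end]


if __name__ == "__main__":
    grid = [-2, -1, 0, 1, 2]
    print(sum_over_histories(grid, 0, 1, steps=4))
    print(transfer_matrix_sum(grid, 0, 1, steps=4))
```

The two numbers match up to rounding; the transfer-matrix route is how such sums are made tractable, since its cost grows linearly rather than exponentially with the number of steps.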

Hartle-Hawking Wave Function

The "boundary condition" of the Hartle-Hawking model is that the universe has no boundary, hence the name "no-boundary proposal": the universe is determined at every point by its laws, in particular the quantum state and the Hamiltonian. The initial state $\left | \psi _{HH} \right \rangle$ is calculable by the same path integral as the unitary operator that governs its evolution.
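For reference, the no-boundary wave function is usually written as a Euclidean path integral (units with $\hbar = 1$). Here $h_{ij}$ and $\phi$ are the 3-geometry and matter-field value on the single boundary the geometry is allowed to have, $I_E$ is the Euclidean action of gravity plus matter, and $\mathcal{C}$ is the class of compact 4-geometries $g$ (with fields $\Phi$) that have no other boundary:

$$\Psi_{HH}[h_{ij}, \phi] = \int_{\mathcal{C}} \mathcal{D}g \, \mathcal{D}\Phi \; e^{-I_E[g, \Phi]}$$

In practice the integral is only ever evaluated approximately, for example by its saddle points, such as the 4-sphere geometry described next.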

Hartle and Hawking split the wave function into two parts: in one region it oscillates (this describes the universe of our experience) and in the other it grows exponentially. If you try to give the exponential region a geometric interpretation, it corresponds to a 4-dimensional sphere, in which imaginary numbers have replaced real numbers for the time variable. So consider the metric for a 4-dimensional sphere:

$$ds^2 = R^2\left(d\tau^2 + \cos^2\tau \, d\Omega_3^2\right)$$

where $R$ is the radius, $\tau$ is the angle measured from the equator of the sphere, and $d\Omega_3^2$ is the metric of a unit sphere of one less dimension (a unit 3-sphere). If we make the angle imaginary, $\tau = it$, then $d\tau^2$ changes sign, cosine becomes hyperbolic cosine, and we get the metric for de Sitter space.
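Spelled out, the substitution is a standard calculation (I write $T = Rt$ to restore a physical time coordinate, with $c = 1$):

$$\tau = it \quad\Rightarrow\quad d\tau^2 = -dt^2, \qquad \cos(it) = \cosh t,$$

$$ds^2 = R^2\left(d\tau^2 + \cos^2\tau \, d\Omega_3^2\right) \;\longrightarrow\; ds^2 = R^2\left(-dt^2 + \cosh^2 t \, d\Omega_3^2\right) = -dT^2 + R^2\cosh^2\!\left(\frac{T}{R}\right) d\Omega_3^2 .$$

This is closed de Sitter space: the spatial 3-spheres have radius $a(T) = R\cosh(T/R)$, which shrinks for $T < 0$, reaches its minimum $a = R$ at $T = 0$, and grows for $T > 0$. With $R = H^{-1}$, that minimum is the radius $H^{-1}$ of a de Sitter space.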

This mathematical trick is not unlike what happens in the Hartle-Hawking model. They end up with a model that does not begin at a point but instead collapses from large radii and then "bounces" at the Big Bang.

The model is very interesting, but it turns out not to work: a paper by Don Page on the arXiv shows that it assigns a very large probability to a de Sitter space much larger than the one we observe.
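A rough way to see why the no-boundary proposal favours large de Sitter spaces: in the standard lowest-order semiclassical estimate, the probability it assigns to a de Sitter universe with vacuum energy density $V$ is $P_{HH} \propto \exp(+3/(8V))$ in Planck units ($G = \hbar = c = 1$). Smaller $V$, and hence a larger de Sitter radius $H^{-1} = \sqrt{3/(8\pi V)}$ (from the Friedmann equation $H^2 = 8\pi V/3$), is exponentially preferred. The sketch below only evaluates these two formulas, drops all prefactors, and is not a reproduction of Page's calculation; the sample values of $V$ are arbitrary.

```python
import math


def de_sitter_radius(V):
    """Hubble radius 1/H for vacuum energy density V (Planck units),
    from the Friedmann equation H^2 = 8 pi V / 3."""
    return math.sqrt(3.0 / (8.0 * math.pi * V))


def log_prob_hartle_hawking(V):
    """Lowest-order no-boundary estimate ln P_HH = +3 / (8 V), prefactors dropped.
    Working with logarithms avoids overflowing exp() for small V."""
    return 3.0 / (8.0 * V)


if __name__ == "__main__":
    for V in (1e-2, 1e-4, 1e-6):  # illustrative vacuum energy densities
        print(f"V = {V:.0e}: radius 1/H = {de_sitter_radius(V):.3g}, "
              f"ln P_HH = {log_prob_hartle_hawking(V):.3g}")
```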

Vilenkin tunneling from "nothing"

Vilenkin's model is similar to the Hartle-Hawking model, but he instead imposes boundary conditions. These conditions are not, however, on the universe itself; they are a constraint on the space of all physically possible universe configurations (superspace) consistent with the wave function of our universe.

Vilenkin assumes that the universe can begin in real time and then adds the condition that only the expanding branch of the model is allowed (unlike Hartle-Hawking, his model has no contracting phase and no "bounce"). The universe can then begin at a zero-dimensional point, a mathematician's empty set, tunnel to the minimal radius $H^{-1}$ of a de Sitter space, and begin to inflate to a large size.
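Here is a sketch of where that minimal radius and the tunneling probability come from, under the usual simplifying assumptions: a closed universe described only by its scale factor $a$ and a constant vacuum energy with Hubble rate $H$, Planck units ($G = \hbar = c = 1$), and operator-ordering ambiguities ignored. The Wheeler-DeWitt equation then reduces to $\psi''(a) = U(a)\,\psi(a)$ with $U(a) = (3\pi/2)^2 a^2 (1 - H^2 a^2)$. For $a < H^{-1}$ the potential is positive (the classically forbidden, Euclidean region); at $a = H^{-1}$ it changes sign and beyond that the wave function oscillates. The code integrates $\sqrt{U}$ across the barrier with the midpoint rule and compares it with the closed form $\pi/(2H^2)$. The tunneling probability is then roughly $\exp(-2\int\sqrt{U}\,da) = \exp(-3/(8V))$, which, unlike the Hartle-Hawking estimate $\exp(+3/(8V))$, favours large $V$. Function names and the value of $V$ are illustrative.

```python
import math

PREFACTOR = 3.0 * math.pi / 2.0  # 3*pi/(2G) with G = 1 (Planck units)


def barrier(a, H):
    """Minisuperspace Wheeler-DeWitt potential U(a) = (3 pi/2)^2 a^2 (1 - H^2 a^2)."""
    return PREFACTOR ** 2 * a ** 2 * (1.0 - (H * a) ** 2)


def tunneling_exponent(H, n=200_000):
    """Midpoint-rule estimate of the WKB integral of sqrt(U) from a = 0
    (the 'nothing' end) to the turning point a = 1/H, where U changes sign."""
    a_turn = 1.0 / H
    da = a_turn / n
    return sum(math.sqrt(barrier((k + 0.5) * da, H)) for k in range(n)) * da


if __name__ == "__main__":
    V = 1e-2                                # illustrative vacuum energy density
    H = math.sqrt(8.0 * math.pi * V / 3.0)  # Friedmann equation
    numeric = tunneling_exponent(H)
    exact = math.pi / (2.0 * H ** 2)
    print(f"turning point (nucleation radius) 1/H = {1.0 / H:.3f}")
    print(f"WKB integral: numeric {numeric:.5f}, closed form {exact:.5f}")
    print(f"ln P_tunnel ~ {-2.0 * numeric:.4f} vs -3/(8V) = {-3.0 / (8.0 * V):.4f}")
```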

This model is a lot more confusing to me. I don't accept that the zero-dimensional point is really nothing, and I know I'm not the only one to make this criticism. Gott & Li argue that when realistic energy fields are included, something like a scalar field of the kind we observe in the real universe, you should get a non-zero energy at the point. What's more, quantum theory only ever describes tunneling between classical states; we have no knowledge of the kind of mechanism Vilenkin is proposing.

It's also not clear, at least to me, that the model leads to a unique wave function (in fairness, this problem is not unique to Vilenkin). You can imagine defining that zero-dimensional point by shrinking a certain manifold down to zero size, and since there are infinitely many ways to do that, you get infinitely many wave functionals. Then again, it's possible that I've just misunderstood the model (perhaps someone should ask AronRa).

General comments

I've already talked about some of the practical limitations of quantum cosmology, and part of the response has been to lower the bar for what counts as "science". At minimum, an acceptable theory should reproduce general relativity and quantum theory in the appropriate limits, but a more satisfying approach should also reproduce certain large-scale features of the universe, as well as the type of matter contained within it (and what it's doing).

In addition to these practical limitations, there are a whole number of conceptual problems too. Probability cannot simply be the squared modulus of the wave function's amplitude, because the wave function of the universe is not normalizable in that sense. Instead, at best, cosmologists reduce the Wheeler-DeWitt equation to the Hamilton-Jacobi equation using a semi-classical approximation, with a WKB form for the classical variables; within that limit time re-emerges, and it makes sense to talk about probabilities over classical spacetimes. The physical meaning of probability is still very elusive and not well understood.
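As a minimal illustration of how time re-emerges, take the usual toy minisuperspace model: a closed universe with scale factor $a$, a vacuum energy with Hubble rate $H$, $G = 1$, and ordering ambiguities ignored. The Wheeler-DeWitt constraint is $p_a^2 + U(a) = 0$ with $U(a) = (3\pi/2)^2 a^2 (1 - H^2 a^2)$. Writing $\psi \approx e^{iS(a)}$, the leading WKB order gives the Hamilton-Jacobi equation $(dS/da)^2 = -U(a)$, which contains no time variable at all. If we then identify $dS/da$ with the classical momentum $p_a = -(3\pi/2)\,a\,\dot a$, a time derivative appears and the equation becomes the Friedmann equation $\dot a^2 = H^2 a^2 - 1$. The sketch (hypothetical function names, expanding branch taken as positive) checks that the velocity read off from the Hamilton-Jacobi momentum matches the de Sitter solution $a(t) = \cosh(Ht)/H$.

```python
import math

PREFACTOR = 3.0 * math.pi / 2.0  # 3*pi/(2G) with G = 1 (Planck units)


def hj_momentum(a, H):
    """|dS/da| from the Hamilton-Jacobi equation (dS/da)^2 = -U(a), with
    U(a) = (3 pi/2)^2 a^2 (1 - H^2 a^2). Real only for a >= 1/H."""
    minus_u = -PREFACTOR ** 2 * a ** 2 * (1.0 - (H * a) ** 2)
    if minus_u < 0:
        raise ValueError("a < 1/H is the classically forbidden region")
    return math.sqrt(minus_u)


def velocity_from_momentum(a, H):
    """Identify |dS/da| with |p_a| = (3 pi/2) a |da/dt| and solve for da/dt."""
    return hj_momentum(a, H) / (PREFACTOR * a)


if __name__ == "__main__":
    H = 0.1  # illustrative Hubble rate (Planck units)
    for t in (1.0, 10.0, 30.0):
        a = math.cosh(H * t) / H  # de Sitter solution of (da/dt)^2 = H^2 a^2 - 1
        print(f"t = {t:>4}: da/dt from HJ = {velocity_from_momentum(a, H):.6f}, "
              f"from a(t) = {math.sinh(H * t):.6f}")
```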

A more basic problem has to do with the reduction of the state vector: talk of "collapsing" wave functions of the universe, even within something like the GRW interpretation, is awkward at best. Collapses are jump-like features of the theory that aren't described by the Schrödinger equation, though they're not problematic within a statistical interpretation of quantum theory, where they correspond merely to the knowledge of a particular observer when measuring a system. However, that arguably still involves a dualism between observer and system, one that may not reduce an act of measurement to the same simple quantum mechanical law as everything else.
